Interview with Margaret H. Hamilton

The following is an excerpt from a textbook called Fluency With Information Technology: Skills, Concepts, & Capabilities, 7th Edition (ISBN 0-13-444872-3).

At the time of publishing, the Wikipedia page for Margaret Hamilton includes an important quote about the origin of the term “software engineering”. I was moved by it and wanted to add it to my /quotes page, but first I needed to verify its source. It bothered me that the quote came from an interview given for an out-of-print textbook that, as far as I can tell, cannot be legally obtained. The interview also does not appear in later editions of the book.

Fortunately, I was able to find the complete interview, and I am publishing that excerpt here under the fair use doctrine for educational, archival, and personal informational purposes only.

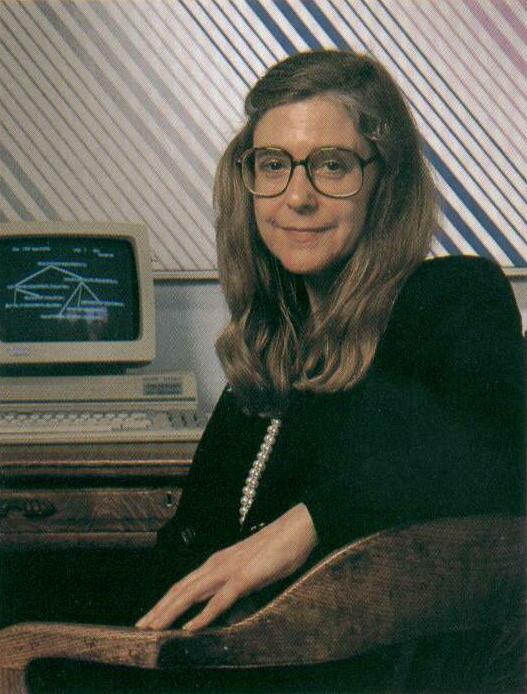

Margaret H. Hamilton is the founder and CEO of Hamilton Technologies, Inc. She is responsible for the development of the Universal Systems Language (USL) together with its integrated systems-to-software “development before the fact” life cycle, based on her mathematical theory of control for systems and software. Margaret was in charge of the Apollo (and Skylab) on-board flight software effort while Director of the Software Engineering Division at MIT’s Instrumentation Laboratory. She culminated this effort by leading her team in an empirical study of the Apollo on-board flight software development effort, resulting in formalizing lessons learned, serving as the origin and much of the foundation of USL.

You coined the term “software engineering.” What was it called before and why did you invent the term?

MHH: It helps to know what it was like back then for those of us building the Apollo on-board flight software. During the early days of Apollo, software was treated like a stepchild; it was not taken as seriously as other engineering disciplines. Considered a mysterious “black box”, it was regarded by many as an art, not a science. To me it was both. Understanding the subtleties of developing real-time asynchronous flight software was left up to the flight software’s systems-software experts. Having this kind of responsibility resulted in our creating a “field”, since there was no school at the time to learn “software engineering”. This necessitated our creating methods, standards, rules and tools for developing the flight software. We were always looking for new ways to prevent errors. When answers could not be found, we had to invent them as we went along.

Many requirements for the flight software were “thrown over the wall” from mission application experts to software engineering experts. To some mission experts, software “magically” appeared within the on-board flight computer, integrated and ready to go. As time went on, what had begun originally as mission requirements for the flight software became more and more understood by everyone in the form of the more application-oriented parts of the flight software, itself, that realized the mission requirements; and the mission expertise moved on from mission experts to software engineering experts. Requirements engineers and software engineers necessarily became interchangeable, as did their life-cycle phases, suggesting that a system is a system, no matter from which discipline things had originated. From this perspective, system design issues became one and the same as software engineering design issues. Yet, the systems-software part of the flight software was in a world all of its own!

“What is the difference,” I asked, “between what they are doing and what we are doing?” Knowing this, and the lack of understanding by many of what it took to create real world software-based systems and the part our software played within these systems, I wanted to give our software “legitimacy” so that it (and those building it) would be given its due respect; and, as a result I began to call what we were doing “software engineering” to distinguish it from other kinds of engineering; yet, treat each type of engineering as part of the overall systems engineering process. When I first came up with the term, no one had heard of it before, at least in our world. It was an ongoing joke for a long time. They liked to kid me about my radical ideas. It was a memorable day when one of the most respected hardware gurus explained to everyone in a meeting that he agreed with me that the process of building software should also be considered an engineering discipline, just like with hardware. Not because of his acceptance of the new “term” per se, but because we had earned his and the acceptance of the others in the room as being in an engineering field in its own right.

As Director of the Software Engineering Division at the MIT Instrumentation Laboratory, you were the leader of the team that built the software for the Apollo Guidance Computer (the computer used in NASA’s Apollo spacecraft that landed on the moon). What were the biggest challenges you faced building that system?

MHH: As the leader of the team, I was “in charge” of the people in the on-board flight software programming group (the “software engineering” team) and the on-board flight software itself. In addition to the software developed by our team, there were others whose code fell under our purview. “Outside” code could be submitted to our team from someone in another group(s) to become part of the official on-board flight software program (e.g., from an engineer in the navigation analysis group). Once submitted to our team for approval, code immediately fell under the supervision of our team; it was then “owned by” and updated by the software engineers to become part of, and integrated with, the rest of the software; and, as such, had to go through the strict rules required of all the on-board flight software; enforced and tested by the software engineers who were now in charge of that area of the software and the software areas related to it. This was to ensure that all the modules within the flight software including all aspects of the modules such as those related to timing, data and priority were completely integrated (meaning there would be no interface errors within, between and among all modules; both during development and during real-time).

The task at hand included developing the software for the Command Module (CM), the Lunar Module (LM) and the systems-software shared between and residing within both the CM and the LM; making sure there were no interface, integration or communication conflicts. Updates were continuously being submitted into the software from hundreds of people over time and over many releases for every mission (when software for one mission was often being worked on concurrently with software for other missions); making sure everything would play together and that the software would successfully interface to and work together with all the other systems including the hardware, peopleware and missionware for each mission.

The biggest challenge: the astronauts’ lives depended on our systems and software being man-rated. Not only did it have to WORK; it had to work the first time. Anything to do with the prevention of errors was top priority, both in development and during real-time where it was necessary to have the flexibility to detect anything unexpected and recover from it at any time and during all time in a real mission. To meet the challenge, the on-board flight software was developed with an ongoing, overarching focus on finding ways to capitalize on the asynchronous and distributed functionality of the system in order to perfect the more systems-oriented aspects of the flight software. Because of the flight software’s system-software’s error detection and recovery techniques that included its system-wide “kill and recompute” from a “safe place” restart approach to its snapshot and rollback techniques, the Display Interface Routines (AKA the priority displays) together with its man-in-the-loop capabilities were able to be created in order to have the capability to interrupt the astronauts’ normal mission displays with priority displays of critical alarms in case of an emergency. This depended on our assigning a unique priority to every process in the software in order to ensure that all of its events would take place in the correct order and at the right time relative to everything else that was going on. Steps earlier taken to create solutions within the multiprogramming environment had become a basis for solutions within a multiprocessing environment. Although only one process is actively executing at a given time in a multiprogramming environment, other processes in the same system, sleeping or waiting, exist in parallel with the executing process. With this as a backdrop, the priority display mechanisms of the flight software were created, essentially changing the man-machine interface between the astronauts and the on-board flight software from synchronous to asynchronous where the flight software and the astronauts became parallel processes.
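
As an illustration only (this is not part of the interview and is not Apollo Guidance Computer code), the idea of giving every process a unique priority and letting a priority display take over the crew's screen can be sketched in a few lines of Python. Every name here (`Scheduler`, `show_normal`, `show_priority`, `acknowledge`) is hypothetical, as is the convention that a lower number means a more urgent job. The sketch assumes a toy system in which only one job runs at a time while the others wait.

```python
import heapq
from typing import Callable, List, Optional, Set, Tuple


class Scheduler:
    """Toy scheduler: one job runs at a time, most urgent job first."""

    def __init__(self) -> None:
        self._ready: List[Tuple[int, str, Callable[[], None]]] = []
        self._used: Set[int] = set()       # priorities currently in use
        self.screen: str = ""              # what the crew display shows now
        self._held: Optional[str] = None   # normal display held back by an alarm
        self.alarm_active: bool = False

    def submit(self, priority: int, name: str, action: Callable[[], None]) -> None:
        # Lower number = more urgent (a convention chosen for this sketch).
        # Priorities must be unique so the run order is never ambiguous.
        if priority in self._used:
            raise ValueError(f"priority {priority} is already assigned")
        self._used.add(priority)
        heapq.heappush(self._ready, (priority, name, action))

    def run(self) -> List[str]:
        """Run every waiting job in priority order; return the order used."""
        order = []
        while self._ready:
            priority, name, action = heapq.heappop(self._ready)
            self._used.discard(priority)
            action()
            order.append(name)
        return order

    def show_normal(self, text: str) -> None:
        # Normal displays are buffered while a priority display is showing.
        if self.alarm_active:
            self._held = text
        else:
            self.screen = text

    def show_priority(self, text: str) -> None:
        # A priority display interrupts whatever the crew was looking at.
        if not self.alarm_active:
            self._held = self.screen
            self.alarm_active = True
        self.screen = text

    def acknowledge(self) -> None:
        # Once the crew responds, the held normal display is restored.
        self.alarm_active = False
        self.screen = self._held or ""
        self._held = None


sched = Scheduler()
# The normal display job is submitted first, the alarm second.
sched.submit(20, "normal_display", lambda: sched.show_normal("DESCENT DATA"))
sched.submit(1, "priority_alarm", lambda: sched.show_priority("ALARM 1202"))
order = sched.run()
print(order)         # the alarm runs first despite being submitted later
print(sched.screen)  # the alarm stays on screen until acknowledged
sched.acknowledge()
print(sched.screen)  # the buffered normal display returns
```

The point of the sketch is the decoupling: the software keeps running its jobs in priority order while the crew decides when to respond, which is one simple reading of the software and the astronauts acting as parallel processes.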

The development and deployment of this functionality would not have been possible without an integrated system of systems (and teams) approach to systems reliability and the innovative contributions made by the other groups to support our systems-software team in making this become a reality. The hardware team at MIT changed their hardware and the mission planning team in Houston changed their astronaut procedures; both working closely with us to accommodate the priority displays for both the CM and the LM; for any kind of emergency and throughout any mission. In addition, the people at Mission Control were well prepared to know what to do should the astronauts be interrupted with priority alarm displays.

Since it was not possible (certainly not practical) for us to test our flight software by “flying” an actual mission, it was necessary to test it by developing and running it with a mix of hardware and digital simulations of every (and all aspects of an) Apollo mission which included man-in-the-loop simulations (with real or simulated human interaction) and variations of real or simulated hardware and their integration, in order to make sure that a complete mission from start to finish would behave exactly as expected.

The real test was Apollo 11 itself. On July 20, 1969, I was in the SCAMA room at MIT to support Mission Control during the moon landing when we first heard about the 1201 and 1202 priority alarm displays. I asked myself “why now, at the most crucial time of the mission”? I knew the “mission glue” and the error detection and recovery areas of the flight software’s systems-software by heart. This included the system-wide restarts and the priority displays (the area that provided the buffering between the flight software and the astronaut’s DSKY, created to warn the astronauts in case something went wrong). When the priority displays appeared on the DSKY, the astronaut knew that his normal mission displays were being interrupted and replaced by higher priority displays. It was an emergency! The particular priority displays that took place during the landing gave the astronauts a go/no-go decision: to land or not to land. The first 1201 alarm (followed by a 1202 alarm) took place less than 4 minutes before landing. The decision to keep going, or not, was made with little time to spare. It became clear that the software was not only informing everyone that there was a hardware related problem, but that the software was compensating for it and recovered from it in real time. For all of these reasons and more, the first moon landing was a success. Our computers and our software worked perfectly; the landing took place, and our code was on the moon. An explanation of what happened, and the steps taken by the on-board flight software to “continue on” to landing are briefly described in my letter to the editor, “Computer Got Loaded”, published in the March 1, 1971 issue of Datamation: https://medium.com/@verne/margaret-hamilton-the-engineer-who-took-the-apollo-to-the-moon-7d550c73d3fa#5140.

On Wikipedia, it states you used a special version of Assembly language to build the software for the Apollo Guidance Computer. If you were building this system from scratch today, what language would you use?

MHH: USL, for systems design and building the software. We learned from our ongoing analysis of Apollo and later efforts that the root problem with traditional languages and their environments is that they support users in “fixing wrong things up” rather than in “doing things in the right way in the first place”; that traditional systems are based on a curative paradigm, not a preventative one. It became clear that the characteristics of good design (and development) could be incorporated into a language for defining systems with built-in properties of control. This is how USL came about; and is about. Unlike traditional languages, USL inherently supports the user throughout the life cycle. Instead of looking for more ways to test for errors and continuing to test for errors late into the life cycle, errors are not allowed in the system in the first place, just by the way it is defined. More about this process can be found in the article “Universal Systems Language: Lessons Learned From Apollo,” at https://www.computer.org/csdl/mags/co/2008/12/mco2008120034.html

With USL, correctness is accomplished by the way a system is defined/constructed, having built-in language properties inherent in the grammar. With this approach, a definition models both its application (e.g., an avionics or cognitive system) and built-in properties of control into its own life cycle. Mathematical approaches are often known to be difficult to understand and limited in their use for nontrivial systems. Unlike other mathematically based formal methods, USL extends traditional mathematics with a unique concept of control. Its formalism is “hidden” by language mechanisms derived in terms of that formalism. Whereas most errors are found (if they are ever found) during the testing phase in traditional developments; with this approach, correct use of the language prevents (“eliminates”) errors before the fact. Much of what seems counterintuitive with traditional approaches becomes intuitive with a preventative paradigm: the more reliable a system, the higher the productivity in its development.